Some managers and L&D people just don’t seem to get it.

It reminds me of the remarkable insight of the author Aldous Huxley when he said “I see the best, but it’s the worse that I pursue”.

The evidence has been around for a long time that formal training on detailed task and process-based activities in advance of the need to carry out the task or use the process is essentially useless.

The logic and evidence both point to the fact that the “we’re rolling out a new system, so we’ve got to train them all” approach employed by many (read ‘most’) organisations, and offered as a service by training suppliers across the globe, is both inefficient and fundamentally ineffective.

You might as well throw the money spent on these activities out the window. Actually, a better option would be to spend the diminishing L&D budget on approaches that do work. Not only would new rollouts and upgrades come into use more smoothly, but I am prepared to bet that it would leave budget over to use for other things, or to take as savings (perish the thought!).

Even if you’ve never been involved in training for a rollout or upgrade, only to find that users demand re-training or simply call the help desk as soon as go-live happens, it helps to be aware of some fundamental truths about this flawed model.

Truth 1: Too much information for any human to remember

Most pre go-live training is delivered through ILT or eLearning and is content-heavy. The instructional designers and SMEs feel the need to cover every possible eventuality and load courses with scenarios, examples and other ‘just-in-case’ content.

I have seen multiple PowerPoint decks of 200-300 slides delivered over 2-3 days for CRM upgrades. Few humans can recall this amount of information for later use, or even a fraction of it. Maybe if they have photographic memories they can, but designing for photographic memories is not really a sensible strategy. The rest of us just park most of what we do remember at the end of the session in the ‘clear out overnight’ part of our brains.

And all those expensively-produced User Guides are simply a waste of the Earth’s limited natural resources. They tend to be too detailed, linear, full of grabs of screens that the user will never refer to, impossible to navigate, and the last thing people reach for when they need help in using a new system. They are far more likely to reach for the phone and call the Help Desk. Training User Guides are quintessentially shelfware. Usually the only time someone picks user guides off the shelf is to throw them in a bin (hopefully one marked ‘recycling’) during a clear-out or an office move.

Truth 2: Too much time between the training and use

Embedding knowledge in short-term memory and long-term memory are two very different processes. Even the information that can be recalled immediately after training – and that’s likely to be minimal – will be lost if it isn’t reinforced through practice within a few hours.

Practice and reinforcement are required for the neurological processes of conversion to long-term memory to occur – chemicals in the brain such as serotonin, cyclic AMP, and specific binding proteins do that job.

Do you think Tiger Woods’ brain retained the details of how to arrange his body to hit a ball 400 yards without practice and reinforcement?

Truth 3: Post-Training Drop-Off

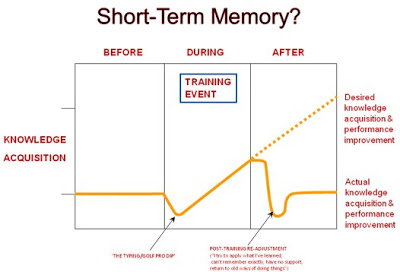

Harold Stolovitch & Erica Keeps carried out some very interesting research on desired vs. actual knowledge acquisition and performance improvement. The work uncovered some important observations.

The graph above shows the results. During the training event, following an initial dip – the ‘typing/golf pro dip’ – where performance drops as new ways of carrying out tasks are tried out, knowledge and performance then improve to the end of the training session. The individual walks out the door knowing more and being able to perform better than when they started the training.

Then the problems start.

The drop-off following the training event (called ‘post-training re-adjustment’ by Stolovitch and Keeps) can kick in very quickly, possibly in a matter of hours. You finish a day’s training course, go home, sleep, and by the next morning a lot of what you had ‘learned’ has been cleaned out of your short-term memory. Bingo!

Then the next day you get back to work and try to implement what you learned in the class. The trouble is, you can’t remember exactly what to do, you don’t have any support (that trainer who you called over to prompt you when you went through the exercises in class yesterday isn’t there), so you try a few things, find they don’t work (unless you’re lucky) and then you simply go back to doing what you did previously…

The result?

Performance improvement = zero

Value added by the training = zero

Return on investment = zero

Upwards – Following the Dotted Line

The only way knowledge retention and performance can follow the dotted line upwards is if plenty of reinforcement and practice immediately follows the training. Even better if this is accompanied by some form of support – from line managers setting goals and monitoring performance, from SMEs providing on-demand advice and support, or even from learning professionals providing workplace coaching.

An even better (and certainly cheaper) option is simply to cut out the training and replace it with a support environment from the start.

Where Performance Support Trumps Training

There are some very good ePSS (electronic Performance Support Systems) or BPG (Business Process Guidance) tools available now. They are economical and generally straightforward to implement, and they trump training every time for following the defined processes found in ERP and CRM systems and other software products.

Just Like a GPS System

ePSS/BPG tools provide context-sensitive help at the point-of-need and “act like a GPS system rather than a roadmap”, as Davis Frenkel, CEO of Panviva Inc., the company that produces the very impressive SupportPoint BPG tool, explains. “When you’re learning to follow a process, you just want to know the next 2-3 steps you need to take. You don’t want to have to remember the entire 20-30 process steps and all the options”, Frenkel says. I think he’s absolutely right and it’s a good analogy.

A GPS tells you that you need to ‘turn left at the next intersection’ or ‘take a right turn then keep straight ahead’. It instructs incrementally, and doesn’t tell you every turn on the journey when you set out.

When there’s no access to GPS and the driver has to revert to a map (and doesn’t have a flesh-and-blood GPS sitting beside them reading the map and instructing in increments) they will tend to read and memorise just the next 2-4 turns on the journey and then re-read the map to get the next set of instructions. Job done, destination reached.

So why don’t many organisations and L&D folk wake up to the failings of using the wrong approaches to achieve their required outcomes?

Why are millions of $/£/€/¥ spent every year training employees on using enterprise systems in this way when there’s evidence to prove that it simply doesn’t work?

Member of the Internet Time Alliance and Co-founder of the 70:20:10 Institute, Charles Jennings is a leading thinker and practitioner in learning, development and performance.

It's amazing that, after all these years of performance support being shown to work especially well with information technology systems, it's not part of the repertoire of the training profession. I've also saved hundreds of thousands of dollars for clients by advocating a performance support approach over a training approach.

Actually, Return on Investment is negative, not zero. The whole exercise is like the fellow who hammers his head because it feels so great when he stops.

Of course you're right, Jay. There's always the opportunity cost to take into account.

One of the reasons we see this is that these trainings and manuals are the product of the documentation/training folks learning the system. These are their notes and how they have made sense of the beast. The true audience is lost.

I recently implemented an approach similar to what you describe using a Wiki as a performance support tool and it's not going as well as I would have hoped. I am finding it is almost as difficult to get employees using a performance support tool as it is getting them to use a manual. Either way, they have to search for the answer unique to their situation, and why search for your answer when you can just ask somebody else, like the Helpdesk? I think for a performance support tool to be effective, it needs to be integrated into the software. Users need to be able to click the help button in the module of the software they are using and have it take them directly to the answer. I'm asking too much by asking them to go to the Wiki and search for the answer. Now, I either need to work with the software developers to have the support tool integrated into the software or continue with my relentless marketing of the support tool and hope it works. I wrote more about the problems I am having promoting the use of the support tool at the link below. Suggestions are gladly taken.

http://joedeegan.blogspot.com/2009/04/continuous-learning-experience.html

Joe – the key to a really effective performance support tool of the type you've attempted to mimic with a Wiki is that it provides context sensitivity (i.e. 'knows' where you are in a supported application and provides you with guidance only on that screen/field). You shouldn't need to go searching for the support you need – it should be presented to you.

The other characteristic of a good ePSS (performance support) tool is that it DOESN'T NEED to be integrated with the application (i.e. there shouldn't be any need to modify or interfere with the application at code level at all).

I think you're just asking for an on-going nightmare if you get some IT person to tinker with the application code to get the context sensitivity. There are a number of ePSS tools that provide context sensitivity without interfering with the target application code at all. Have a look at SupportPoint (mentioned in my post above) for one.

Came back to this article following the trainingzone feature, Charles. All good stuff, as always! Systems rollouts are a specific case of the "elephant in the room" – training delivered by the wrong people, to the wrong people, at the wrong time, in the wrong way. This equates to no learning, and no value added to either the individual or the organisation.

Even a good training delivery by the right people to the right people (this is where most training measurement settles for fantastic feedback on happy sheets), if delivered at the wrong time in the wrong way, yields no learning in the long term and no added value.

One point though – if you can design e-learning (in the form of simulations) for delivery prior to go live – accepting that it does not mimic the live system exactly (80/20 rule applies) – you can achieve a great deal in terms of confidence building and familiarity with the underlying business processes the system embodies. This e-learning can then be quickly updated (using the right tools and resources) and used as performance support and ongoing induction for new staff. EPSS can then take the weight going forward.

Of course, nothing beats making a system intuitive to use in the first place. But then I'm now clearly asking too much of the world…

P.S. Charles, just put your blog on my blog roll…

Sorry this post comes so late (19 Dec. 2009) – when I was working with UNDP during their PeopleSoft implementation they were using OnDemand Software from Global Knowledge. OnDemand is embedded in PeopleSoft (Oracle) to provide several levels of performance support at the process level, including an option to walk the user through completing the process (e.g. a Purchase Order).

The product info describes this as simulated transactional training and testing, as well as automated step-by-step instructions.

Oracle purchased Global Knowledge in 2008.

I am currently involved in a large rollout of an internally managed enterprise level system that is core to the business. Formal training was demanded. My first question was about EPSS or embedded help systems. None. "No time" offered as the excuse.

I noted the issues of "data dumping", the lack of opportunity for access or application, the limits of human memory, and the importance of relevance. I also pointed out that guidance is most needed in the performance environment – learning IN the workstream.

As you might predict, the bad habits seemed more addictive than any drug on the planet. They all agreed with every statement, but when prompted to commit to executing a better design, they were unwilling to give up the familiar harmful behaviors.

criticallearner, I've seen this time and time again over the years.

I had exactly the same situation with a migration from Oracle to SAP. The programme manager (who understood the limitations of training in this situation) and I presented an ePSS solution to the executive team responsible for the migration. We thought it was going well until one exec. team member (a global director of operations, as it happens) said 'so when this is done, will we get the real training?'. At the time I thought he was either foolish or ignorant. In retrospect, maybe his drug dosage was just too high. Whatever, I estimate he cost the company at least a 6-figure sum.

I guess there's no underestimating the power of bad habits – nor the stupidity of people who think that because they've been to school and college they understand how adults learn.

One way to address the apparent lack of ability to think and act outside the 'training' box is to ask (or demand) that some data is collected on the effectiveness of the training – analysing helpdesk calls, surveying users 4, 8 and 12 weeks after rollout to get feedback on the usefulness of the training and whether they think some form of ePSS might have helped them get up-to-speed faster, measuring the total cost of the training etc.

This approach may not help on this rollout (if minds are already set on training), but it is a brave or stupid manager or project manager who demands training in the face of data from the last rollout in your company showing that the return is likely to be very poor at best and negligible at worst – which the data surely will show.